Have you ever hummed a melody in the shower and wished you could transform it into a full-fledged song? Or scrolled through royalty-free music libraries for hours, only to settle for something that’s “close enough” but never quite captures your vision? I’ve been there—staring at my video project at 2 AM, desperately needing the perfect soundtrack but lacking both the budget and the musical expertise to create it.

That frustration led me down a rabbit hole of AI music creation tools, and what I discovered changed how I approach content creation entirely.

The AI song generator landscape has evolved from producing robotic-sounding loops to crafting compositions that genuinely surprised me with their emotional depth and production quality. But like any emerging technology, the experience isn’t always seamless, and understanding what these tools can (and can’t) do makes all the difference.

The Creative Bottleneck We All Face

Traditional music production demands three scarce resources: time, money, and specialized skills. Hiring a composer costs anywhere from $500 to $5,000 per track. Learning music production software takes months of dedicated practice. Even purchasing pre-made tracks from stock libraries adds up quickly when you need variety and exclusivity.

For independent creators, small business owners, and content producers, this creates an impossible choice: compromise your creative vision or blow your budget on a single audio element.

How AI Music Generation Actually Works (In My Experience)

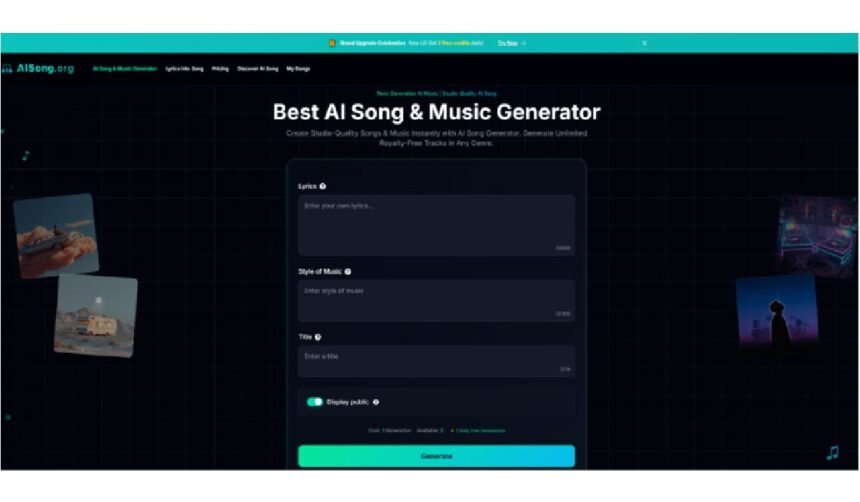

After testing various platforms over the past six months, I’ve learned that AI music generation operates on a simple but powerful principle: you describe what you want, and the system interprets your intent through natural language processing combined with trained musical pattern recognition.

In my tests with different tools, the process typically unfolds in three stages:

Input Phase: You provide either a text description (“upbeat electronic track with 80s synth vibes”) or actual lyrics you want set to music. The specificity of your prompt dramatically affects the output quality—I found that vague descriptions like “happy song” produced generic results, while detailed prompts yielded surprisingly nuanced compositions.

Generation Phase: The model draws on the musical patterns it learned during training to construct melodies, harmonies, chord progressions, and rhythmic structures that match your specifications. This usually takes 30 seconds to 2 minutes, though I’ve occasionally waited up to 5 minutes during peak usage times.

Refinement Phase: Here’s where reality diverges from marketing promises—you’ll likely need multiple generations to achieve your desired result. In my experience, I typically generate 3-5 variations before finding one that truly fits my project. This isn’t a flaw; it’s simply the nature of creative AI tools that interpret prompts probabilistically rather than deterministically.
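A quick way to see why several attempts is normal rather than a defect: if each generation independently has some chance p of fitting the project, the chance that at least one of n attempts fits is 1 - (1 - p)^n. The Python sketch below is only a back-of-the-envelope illustration. The 30% per-attempt figure is an assumption I picked for the example, not something I measured, and real generations from the same prompt are not perfectly independent.

```python
def chance_of_a_keeper(p_fit: float, attempts: int) -> float:
    """Probability that at least one of `attempts` generations fits.

    Assumes every attempt fits independently with probability `p_fit`
    (a simplifying assumption, not a property of any particular tool).
    """
    if not 0.0 <= p_fit <= 1.0:
        raise ValueError("p_fit must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must not be negative")
    # Complement of "every single attempt misses".
    return 1.0 - (1.0 - p_fit) ** attempts


if __name__ == "__main__":
    ASSUMED_P_FIT = 0.30  # illustrative guess, not measured data
    for n in range(1, 6):
        print(n, round(chance_of_a_keeper(ASSUMED_P_FIT, n), 2))
```

Under that assumed 30% hit rate, three attempts give roughly a 66% chance of a usable track and five give roughly 83%, which lines up with the 3-5 variations I usually end up generating.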

Real-World Capabilities vs. Limitations

Let me be honest about what I’ve observed across dozens of generated tracks:

| Aspect | What Works Well | Where It Still Struggles |
| --- | --- | --- |
| Genre Versatility | Pop, electronic, lo-fi, ambient, and acoustic styles sound convincingly authentic | Complex jazz improvisation and orchestral arrangements can feel somewhat formulaic |
| Vocal Quality | Clear pronunciation, decent emotional inflection in major languages | Occasional awkward phrasing; non-English vocals vary significantly in quality |
| Production Value | Surprisingly professional mixing and mastering that rivals mid-tier studio work | Subtle artifacts sometimes appear in busy arrangements with multiple instruments |
| Lyrical Coherence | Maintains thematic consistency and proper song structure (verse-chorus-bridge) | Can produce clichéd phrases; works best when you provide your own lyrics |
| Customization Control | Excellent at capturing mood, tempo, and general style from descriptions | Limited ability to specify exact musical elements like “add a guitar solo at 1:32” |

The Practical Advantages I’ve Discovered

Speed That Transforms Workflows: What used to take me three days of searching, licensing negotiations, and audio editing now happens in under an hour. For a recent product launch video, I generated five different background tracks in a single afternoon, allowing my team to choose the perfect sonic identity for our brand.

Genuine Royalty-Free Ownership: This aspect deserves emphasis because it solves a real pain point. Every track I’ve generated comes with full commercial rights: no attribution requirements, no usage limits, no surprise DMCA claims months later. I’ve used AI-generated music in client projects, YouTube videos, and podcast intros without a single licensing headache.

Experimentation Without Financial Risk: Before AI tools, testing different musical directions meant commissioning multiple tracks or purchasing various licenses. Now I can explore ten different genre approaches for the same project at virtually no cost, making creative decisions based on what works rather than what I can afford.

Who Benefits Most From This Technology?

Through conversations with other creators and my own use cases, certain profiles emerge as ideal candidates:

Content Creators: YouTubers, podcasters, and social media producers who need fresh, unique audio for every project without recycling the same stock tracks everyone recognizes.

Independent Filmmakers: Low-budget productions that need original scores but can’t afford traditional composers. In my testing, AI-generated ambient and tension-building tracks worked particularly well for short films.

Marketing Teams: Agencies and in-house marketers creating multiple campaigns simultaneously. The ability to generate brand-specific sonic identities quickly proved invaluable for A/B testing different emotional approaches.

Aspiring Musicians: Songwriters who have lyrics and melodies in their heads but lack the technical skills to produce demos. While purists might scoff, I’ve seen talented lyricists use AI generation as a sketching tool before working with human producers.

The Learning Curve and Prompt Engineering

Here’s something most platforms don’t emphasize: getting great results requires developing what I call “musical prompt literacy.” My early attempts produced serviceable but uninspiring tracks because I approached prompts too literally.

The breakthrough came when I started thinking like a music producer describing a vision to session musicians. Instead of “sad piano song,” I’d write “melancholic piano ballad with sparse arrangement, reminiscent of a rainy afternoon, tempo around 65 BPM, minor key with subtle string accompaniment building in the second half.”

That level of detail—painting a scene, specifying technical parameters, and referencing emotional contexts—consistently produced results that felt intentional rather than random.
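To make that habit repeatable, I find it helps to treat a prompt as a small brief with named slots. The Python sketch below is my own illustration, not any platform's API: the `TrackBrief` class and its field names are hypothetical, and all it does is assemble plain text that you would paste into whichever tool you use.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TrackBrief:
    """Hypothetical helper: the slots a detailed music prompt tends to fill."""
    mood: str
    style: str
    arrangement: str = ""          # e.g. "sparse arrangement"
    scene: str = ""                # e.g. "a rainy afternoon"
    tempo_bpm: Optional[int] = None
    key: str = ""                  # e.g. "minor key"
    development: str = ""          # how the track should evolve

    def to_prompt(self) -> str:
        # Lead with mood and style, then add whatever detail was provided.
        head = f"{self.mood} {self.style}"
        if self.arrangement:
            head += f" with {self.arrangement}"
        parts = [head]
        if self.scene:
            parts.append(f"reminiscent of {self.scene}")
        if self.tempo_bpm is not None:
            parts.append(f"tempo around {self.tempo_bpm} BPM")
        if self.key and self.development:
            parts.append(f"{self.key} with {self.development}")
        elif self.key or self.development:
            parts.append(self.key or self.development)
        return ", ".join(parts)


vague = TrackBrief(mood="sad", style="piano song")
detailed = TrackBrief(
    mood="melancholic",
    style="piano ballad",
    arrangement="sparse arrangement",
    scene="a rainy afternoon",
    tempo_bpm=65,
    key="minor key",
    development="subtle string accompaniment building in the second half",
)

if __name__ == "__main__":
    print(vague.to_prompt())
    print(detailed.to_prompt())
```

The empty slots in the vague brief are the useful part: they show at a glance which details (scene, tempo, key, development) you have left for the tool to guess.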

Comparing Traditional vs. AI Music Creation

| Factor | Traditional Production | AI Music Generation |
| --- | --- | --- |
| Time Investment | 2-6 weeks from brief to final delivery | 30 minutes to 2 hours including iterations |
| Cost Range | $500-$5,000+ per track | Free to start |
| Skill Requirements | Years of musical training or hiring experts | Ability to describe your vision clearly |
| Customization Depth | Unlimited: every element controllable | Moderate: general direction with some unpredictability |
| Emotional Authenticity | Human nuance and intentionality | Surprisingly effective but occasionally formulaic |
| Revision Flexibility | Expensive and time-consuming | Instant: generate new variations freely |

Final Thoughts: A Tool, Not a Replacement

After six months of regular use, I view AI music generation the way I view smartphone photography: phone cameras haven’t made professional photographers obsolete, but they’ve democratized visual storytelling for millions of people who would never invest in DSLR equipment and Lightroom training.

Similarly, AI-generated music won’t replace Hans Zimmer scoring the next blockbuster, but it empowers creators like me, people with musical ideas but without conservatory training, to bring our visions to life. The technology works best when you understand its strengths (speed, variety, accessibility) and its limitations (the usual need for multiple attempts, less control over micro-details).

For anyone who’s ever felt limited by the audio options available to them, exploring what modern AI music generation can do feels less like using a tool and more like unlocking a creative capability you didn’t know you had. The barrier between imagination and execution has never been lower—and that shift is worth paying attention to.